Chatbots are hot stuff right now, and ChatGPT is chief among them. But because its responses are so powerful and humanlike, academics, educators, and editors are all dealing with a rising tide of AI-generated plagiarism and cheating. Your old plagiarism detection tools may not be enough to tell the real from the fake.

In this article, I talk a little about this nightmarish side of AI chatbots, check out a few online plagiarism detection tools, and explore how dire the situation has become.

Lots of detection options

The November 2022 release of OpenAI’s ChatGPT thrust chatbot prowess into the limelight. It allowed any regular Joe (or any professional) to generate smart, intelligible essays or articles, and to solve text-based math problems. To the unaware or inexperienced reader, the AI-created content can quite easily pass as a legit piece of writing, which is why students love it and teachers hate it.

A great challenge with AI writing tools is that their command of natural language and grammar cuts both ways: they can build unique, almost individualized content even if the content itself was drawn from existing material. That means the race to beat AI-based cheating is on. Here are some options I found that are available right now for free.

GPT-2 Output Detector comes straight from ChatGPT developer OpenAI to demonstrate that it has a bot capable of detecting chatbot text. Output Detector is easy to use: enter text into the text field and the tool immediately provides its assessment of how likely it is that the text came from a human.

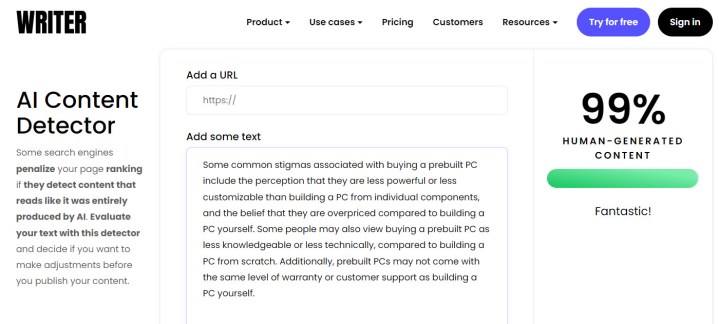

Two more tools that have clean UIs are Writer AI Content Detector and Content at Scale. You can either add a URL to scan the content (Writer only) or manually add text. The results are given as a percentage score of how likely it is that the content is human-generated.

GPTZero is a home-brewed beta tool hosted on Streamlit and created by Princeton University student Edward Tian. It differs from the rest in how the “AIgiarism” (AI-assisted plagiarism) model presents its results. GPTZero breaks the metrics into perplexity and burstiness. Perplexity measures randomness within a sentence, while burstiness measures overall randomness across all the sentences in a text. The tool assigns a number to both metrics, and the lower the number, the greater the possibility that the text was created by a bot.
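To make those two metrics a little more concrete, here's a rough Python sketch. This is not GPTZero's code: a tiny add-one-smoothed word-frequency model (`fit_unigram`, a hypothetical helper) stands in for the large language model a real detector would use, and burstiness is assumed here to be the spread (standard deviation) of per-sentence perplexity, which is only one reading of the term.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def fit_unigram(reference_text):
    """Return a function giving add-one-smoothed word probabilities.

    Assumption: a toy stand-in for a real language model.
    """
    counts = Counter(tokenize(reference_text))
    total = sum(counts.values())
    vocab = len(counts) + 1  # +1 reserves probability mass for unseen words
    return lambda word: (counts[word] + 1) / (total + vocab)


def perplexity(sentence, prob):
    """exp of the average negative log-probability per word."""
    words = tokenize(sentence)
    if not words:
        return 0.0
    avg_neg_log = -sum(math.log(prob(w)) for w in words) / len(words)
    return math.exp(avg_neg_log)


def burstiness(text, prob):
    """Assumed definition: std. deviation of per-sentence perplexity."""
    sentences = [s for s in re.split(r"[.!?]+", text) if tokenize(s)]
    scores = [perplexity(s, prob) for s in sentences]
    return pstdev(scores) if len(scores) > 1 else 0.0


prob = fit_unigram("the cat sat on the mat. the dog sat on the mat.")
print(perplexity("the cat sat on the mat", prob))       # predictable wording
print(perplexity("zebras juggle neon cabbages", prob))  # surprising wording
```

In this toy setup, wording the model has seen often scores a low perplexity and wording it has never seen scores a high one, which mirrors the idea that lower numbers point toward a bot.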

Just for fun, I included Giant Language Model Test Room (GLTR), developed by researchers from the MIT-IBM Watson AI Lab and Harvard Natural Language Processing Group. Like GPTZero, it doesn’t present its final results as a clear “human” or “bot” distinction. GLTR basically uses bots to identify text written by bots, since bots are less likely to select unpredictable words. Accordingly, the results are presented as a color-coded histogram that ranks how predictable each word is. The greater the amount of unpredictable text, the more likely the text is from a human.
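The bucketing idea behind that histogram can be sketched in a few lines of Python. This is an illustration only, not GLTR's implementation: a word-frequency ranking built from a reference text (`rank_table`, a hypothetical helper) stands in for a real language model's predictions, and the default `thresholds` are my assumption.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def rank_table(reference_text):
    """Map each word to its frequency rank (1 = most common).

    Assumption: a toy stand-in for a language model's ranked predictions.
    """
    counts = Counter(tokenize(reference_text))
    ordered = sorted(counts, key=lambda w: (-counts[w], w))
    return {word: i + 1 for i, word in enumerate(ordered)}


def bucket_histogram(text, ranks, thresholds=(10, 100, 1000)):
    """Count how many words fall into each predictability bucket."""
    labels = [f"top {t}" for t in thresholds] + ["unpredictable"]
    histogram = dict.fromkeys(labels, 0)
    for word in tokenize(text):
        rank = ranks.get(word)
        for limit, label in zip(thresholds, labels):
            if rank is not None and rank <= limit:
                histogram[label] += 1
                break
        else:
            # Unknown to the model, or ranked below every threshold.
            histogram["unpredictable"] += 1
    return histogram
```

A text whose words mostly land in the first bucket looks machine-like under this scheme, while a larger share of "unpredictable" words leans human.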

Putting them to the test

All these options might make you think we’re in a good spot with AI detection. But to test the actual effectiveness of each of these tools, I wanted to try them out for myself. So I ran a couple of sample paragraphs through them: answers I wrote to questions that I also posed to ChatGPT.

My first question was a simple one: Why is buying a prebuilt PC frowned upon? Here’s how my own answers compared to the response from ChatGPT.

| Detector | My real writing | ChatGPT |
| --- | --- | --- |
| GPT-2 Output Detector | 1.18% fake | 36.57% fake |
| Writer AI | 100% human | 99% human |
| Content at Scale | 99% human | 73% human |
| GPTZero | 80 perplexity | 50 perplexity |
| GLTR | 12 of 66 words likely by human | 15 of 79 words likely by human |

As you can see, most of these apps could tell that my words were genuine, with the first three being the most accurate. But ChatGPT’s response fooled most of these detector apps too. It scored 99% human on the Writer AI Content Detector, for starters, and was marked just 36.57% fake by the GPT-2 Output Detector. GLTR was the biggest offender, rating my own words about as likely to be human-written as ChatGPT’s (12 of 66 words versus 15 of 79).

I decided to give it one more shot, though, and this time, the results were significantly better. I asked ChatGPT to provide a summary of the Swiss Federal Institute of Technology’s research into anti-fogging using gold particles. In this example, the detector apps did a much better job of approving my own response and detecting ChatGPT.

| Detector | My real writing | ChatGPT |
| --- | --- | --- |
| GPT-2 Output Detector | 9.28% fake | 99.97% fake |
| Writer AI | 95% human | 2% human |
| Content at Scale | 92% human | 0% (Obviously AI) |
| GPTZero | 41 perplexity | 23 perplexity |
| GLTR | 15 of 79 words likely by human | 4 of 98 words likely by human |

The top three tests really showed their strength in this response. And while GLTR still had a hard time seeing my own writing as human, at least it did a good job of catching ChatGPT this time.

Closing

It’s obvious from the results of each query that online plagiarism detectors aren’t perfect. For more complex answers or pieces of writing (such as in the case of my second prompt), it’s a bit easier for these apps to detect the AI-based writing, while the simpler responses are much more difficult to catch. But clearly, they’re not what I’d call dependable. Occasionally, these detector tools will misclassify human-written articles or essays as ChatGPT-generated, which is a problem for teachers or editors wanting to rely on them for catching cheaters.
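One way to act on that unreliability is to check whether the percentage-based detectors even agree with each other. The Python sketch below uses the scores from my two tests. The 20-point `max_spread` cutoff is an arbitrary assumption of mine, not something any of these tools recommend, and converting the GPT-2 Output Detector's "fake" score to 100 minus that value assumes its real and fake percentages sum to 100.

```python
from statistics import mean

# Percent-human scores from the two tests in this article, in the order:
# GPT-2 Output Detector (100 - % fake), Writer AI, Content at Scale.
SCORES = {
    ("prebuilt PC", "my writing"): [100 - 1.18, 100, 99],
    ("prebuilt PC", "ChatGPT"): [100 - 36.57, 99, 73],
    ("anti-fogging", "my writing"): [100 - 9.28, 95, 92],
    ("anti-fogging", "ChatGPT"): [100 - 99.97, 2, 0],
}


def summarize(scores, max_spread=20.0):
    """Return (mean percent human, spread, detectors_agree).

    max_spread is a hypothetical cutoff: if the highest and lowest
    scores differ by more than this, treat the verdict as unreliable.
    """
    spread = max(scores) - min(scores)
    return mean(scores), spread, spread <= max_spread


for (prompt, author), scores in SCORES.items():
    avg, spread, agree = summarize(scores)
    verdict = "detectors agree" if agree else "detectors disagree"
    print(f"{prompt} / {author}: {avg:.1f}% human, spread {spread:.1f} ({verdict})")
```

With this cutoff, the only case where the three tools disagree is ChatGPT's answer to the prebuilt PC question, which is exactly the response that slipped past them.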

Developers are constantly fine-tuning accuracy and false positive rates, but they’re also bracing for the arrival of GPT-4, the successor to the GPT-3.5 family of models that ChatGPT is built on, which is expected to be more capable still.

At this point, in order to identify content generated by AIs, editors and educators will need to combine judiciousness and a little bit of human intuition with one (or more) of these AI detectors. And for chatbot users who have used or are tempted to use chatbots such as Chatsonic, ChatGPT, Notion, or YouChat to pass off their “work” as legit: please don’t. Repurposing content created by a bot (which draws on fixed sources within its database) is still plagiarism no matter how you look at it.
