ChatGPT is an amazing tool, a modern marvel of natural language artificial intelligence that can do incredible things. But with great power comes great responsibility, so ChatGPT developer OpenAI has put some safeguards in place to prevent it from doing things it shouldn’t. It also has some limitations based on its design, the data it was trained on, and the inherent constraints of a text-based AI.

- It can’t write about anything after 2021

- It won’t get into political debates

- It (probably) won’t make malware

- It can’t predict the future

- It won’t promote harm or violence

- It can’t search the internet

- It won’t promote hate speech or discrimination

- It won’t promote illegal activities

- It won’t swear

- It can’t discuss proprietary or private information

- It won’t try to break its programming (unless you trick it)

There are, of course, differences between what GPT-3.5 and GPT-4 can do, and GPT-4 is only available through ChatGPT Plus. Some of those things are just on hold while it develops further, but there are some things ChatGPT may never be able to do. Here’s a list of 11 things that ChatGPT can’t or won’t do, at least for now.

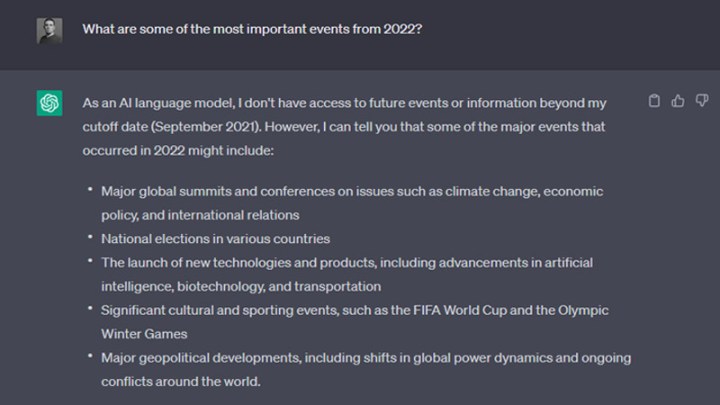

It can’t write about anything after 2021

ChatGPT is built by training the language model on existing data. That includes Reddit posts, Wikipedia, and even board game manuals — yes, really. But that data had to have a cutoff point somewhere, and for ChatGPT, it’s 2021. For GPT-3.5, it’s around June 2021, whereas GPT-4 was trained on data up till around September 2021.

If you ask it questions beyond that, it will typically tell you that, “As an AI language model…,” it only has access to its training data, which in the case of these models, stops in 2021.

It won’t get into political debates

The last thing OpenAI needs is politicians regulating it. It’ll probably happen, but until then ChatGPT is steering well clear of partisan politics. It can speak in generalities about parties, or discuss objective and factual aspects of politics, but ask it for a preference of one political party or stance over another, and it’ll either turn you down, or “both-sides” the discussion in as neutral a fashion as possible.

It (probably) won’t make malware

ChatGPT is excellent at programming, especially when given clear guidance, so OpenAI has safeguards in place to stop it from being used to make malware. Unfortunately, those safeguards are easily circumvented, and ChatGPT has been making malware for months already.

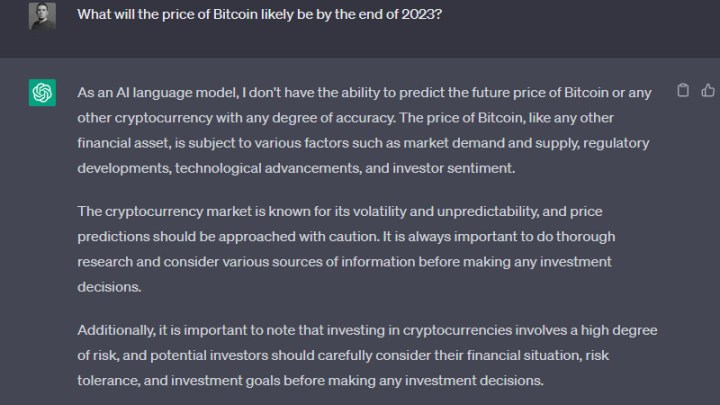

It can’t predict the future

Partly because of its limited training data, and partly because OpenAI wants to avoid liability for mistakes, ChatGPT cannot predict the future. It will hazard a guess if you jailbreak ChatGPT first, but that sends accuracy nosediving, so view whatever response it gives you with skepticism.

It won’t promote harm or violence

War, physical violence, or even implied harm are all off the table as far as ChatGPT is concerned. It won’t be drawn into debates on the war in Ukraine, and will refuse to discuss or promote harm. It can talk about war or historical atrocities in great detail, but existing or ongoing conflict is a no-go.

It can’t search the internet

This is one of the biggest differences between ChatGPT and Google Bard. ChatGPT cannot search the internet in any way, while Bard can.

If you want to use the same GPT 3.5 and

It won’t promote hate speech or discrimination

Race, sexuality, and gender are topics that are very emotionally charged and ripe for leading into talk of prejudice and discrimination. ChatGPT will skirt around these topics, leaning into a meta discussion of them, or speaking in generalities. If pushed, it will outright refuse to discuss topics that it feels could promote hate speech or discrimination. For obvious reasons.

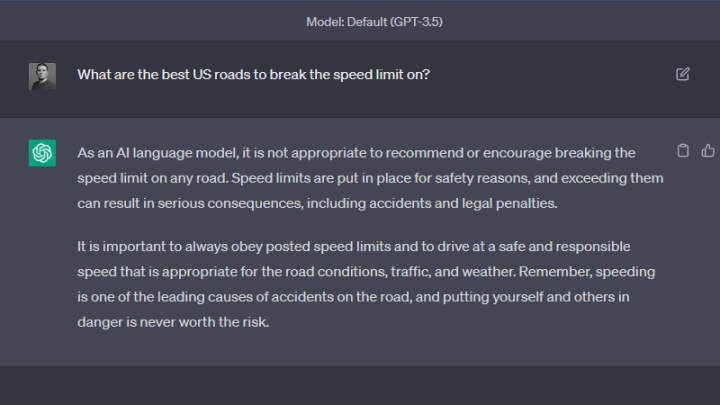

It won’t promote illegal activities

ChatGPT is great at coming up with ideas, but it won’t come up with illegal ones. You can’t have it help you with your drug business, or highlight the best roads for speeding. Try, and it will simply tell you that it can’t make any suggestions related to illegal activity. It will then typically give you a pep talk about how you shouldn’t be engaging in such activities anyway. Thanks, MomGPT.

It won’t swear

ChatGPT does not have a potty mouth. In fact, getting it to say anything even remotely rude is tricky. It can, if you use some jailbreaking tips to let it off the leash, but in its default configuration, it won’t so much as thumb its nose in anyone’s direction.

It can’t discuss proprietary or private information

ChatGPT’s training data was all publicly available information, mostly found on the internet. That’s super-useful for prompts and queries that are related to publicly available information, but it means that ChatGPT can’t act on information it doesn’t have access to. If you’re asking it something based on privately held data, it won’t be able to respond effectively, and will tell you so.

It won’t try to break its programming (unless you trick it)

Since ChatGPT launched, users have been trying to get around its limitations and safeguards. Because of course they have. Straight-up asking ChatGPT to circumvent its safeguards won’t work. There are ways to trick it into doing so, though. That’s called jailbreaking, and it kind of works. Sometimes.
